[Translation] Prompt Engineering: Guiding the Model Step by Step

Foreword

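Since ChatGPT arrived, the world has changed dramatically. Some say every application deserves to be rebuilt from scratch; that may sound like an exaggeration, but it is not far off. From an interaction standpoint, human-computer interaction has reached a turning point: conversational interaction greatly shortens the time it takes to get information, and with it the time spent interacting with machines. Seen this way, re-examining the interaction design of every application is entirely justified. That is why 微交互 (Micro-Interaction) has made one of the biggest decisions in its ten-year history: changing its brand name to "AI Interface". Going forward, we hope to publish more articles on AI interaction, conversational interaction, and the changes brought by large language models. Please stay tuned.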

Introduction

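Since GPT-3 came out, the "pre-train + fine-tune" paradigm has been widely applied to downstream tasks of all kinds of large models. The most prominent problem with this paradigm is that fine-tuning is still too expensive: for small and medium-sized companies, both the technical difficulty and the cost are high, a large amount of data is required, and the final quality is hard to guarantee.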

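As prompt engineering techniques mature, they offer another way to tackle this problem. This article is a translation of an English-language article on prompt learning, offering guidance on designing prompts by hand together with some actual test results. Through it, the translator aims to interpret the practical applications of prompt engineering from an industry perspective rather than an academic one.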

Original article

https://docs.cohere.ai/prompt-engineering-wiki/

Prompt Engineering

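Whenever they meet a new concept, many people feel compelled to dissect the term itself. "Engineering" is straightforward enough, but how should "prompt" best be understood in a Chinese context?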

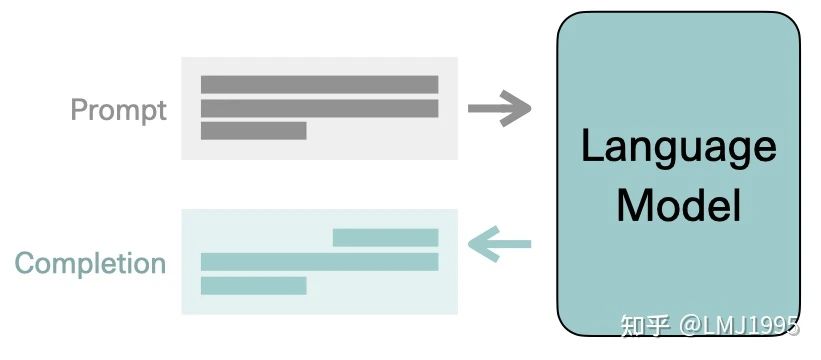

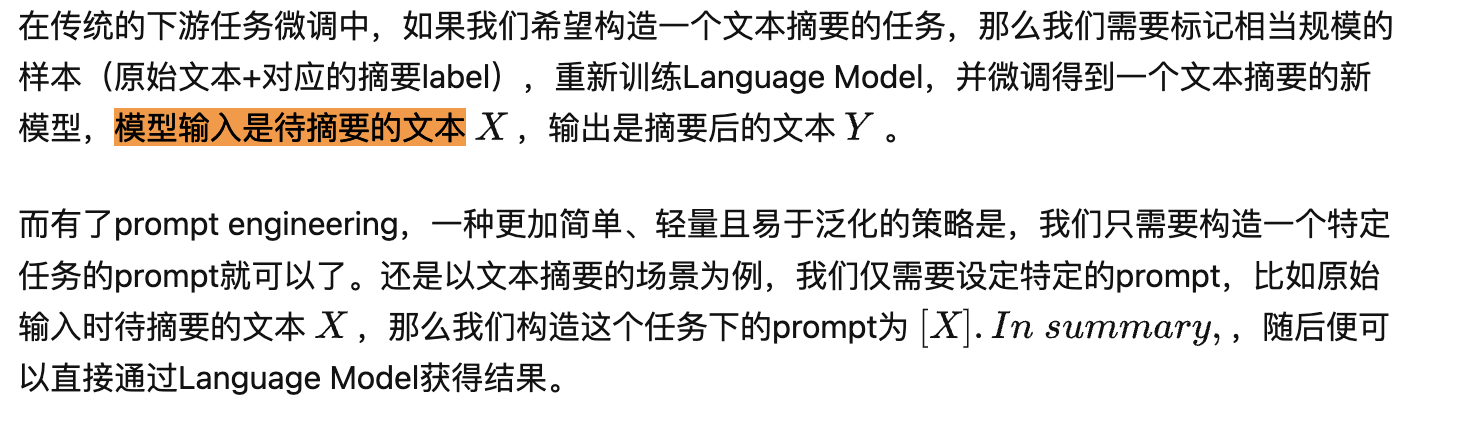

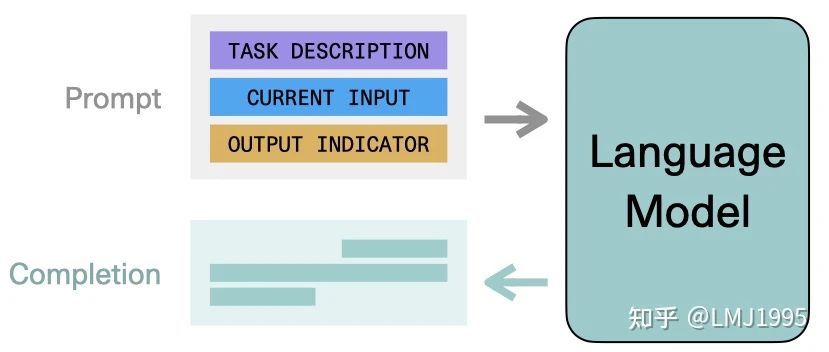

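Translated literally, "prompt" means "instruction" or "hint", but renderings such as "指示工程" (instruction engineering) or "提示工程" (hint engineering) convey very little. Rather than fixating on the wording, it is more useful to grasp the logic behind prompt engineering from the illustrations: put simply, a prompt is an input carefully constructed for a specific task.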

Try multiple formulations of your prompt to get the best generations

In generation tasks, it is often very helpful to try several different prompts for the same problem.

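To a human, different phrasings of the same prompt mean nearly the same thing, but for a model the resulting generations can differ enormously. In the model's training data, different phrasings may come from different textual contexts and serve different purposes, so the final result is often very hard to predict.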

For example, for the text summarization task above, we could try:

- In summary, …

- To summarize in plain language, …

- The main point to take from this article is that …
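A minimal sketch of trying several formulations side by side: the same article text is wrapped with each of the three endings above, giving one candidate prompt per phrasing. The helper name `summary_prompts`, the placeholder article text, and the blank line between article and ending are illustrative assumptions; sending each prompt to a model is left out because the original does not name a specific API.

```python
# Sketch: wrap the same article text with several alternative endings,
# producing one candidate prompt per phrasing. The helper name and the
# blank-line layout are illustrative assumptions, not from the original.
SUMMARY_ENDINGS = [
    "In summary,",
    "To summarize in plain language,",
    "The main point to take from this article is that",
]


def summary_prompts(article: str, endings=SUMMARY_ENDINGS) -> list:
    """Return one prompt per ending: the article, a blank line, then the ending."""
    return [f"{article.strip()}\n\n{ending}" for ending in endings]


if __name__ == "__main__":
    text = "(paste the article text here)"
    for prompt in summary_prompts(text):
        # Each prompt would be sent to the model separately and the
        # generations compared side by side.
        print(prompt)
        print("---")
```

Keeping the endings in a list makes it cheap to add further phrasings and compare all of them on the same input.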

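It is hard to predict what different phrasings of a prompt will produce. Even so, there are techniques beyond aimless manual trial and error that suggest concrete directions for improving a prompt. We do not cover them here; see the articles linked at the end for details.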

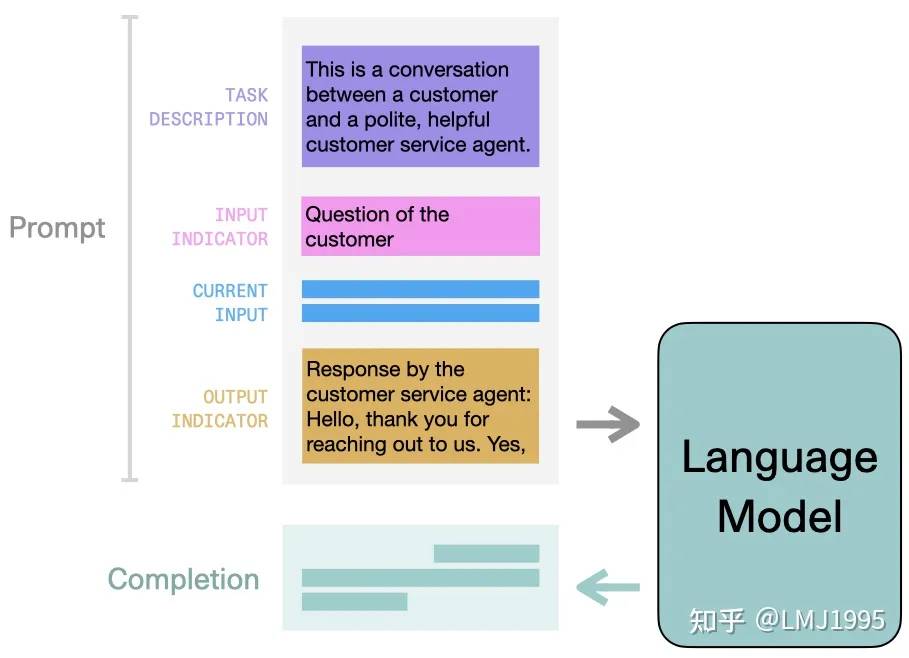

Describe the task and the general setting

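Placing a task-specific cue at the end of the input is one way to design a prompt, but it is not the only one. Adding more description of the task and more context before and after the input is often extremely helpful as well. For the summarization task above, for example, we could go further and add more detail.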

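Now consider a more complex scenario. In customer-service Q&A, we want the model to give customers detailed help that is both understanding and courteous. A customer's question might be: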

Hi, I'd like a refund for the coffee maker I ordered. Would that be possible?

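Given an input like this, how should we design the prompt? Intuitively, the first thing that comes to mind is to give the model a suitable persona: a polite and helpful customer service agent.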

This is a conversation between a customer and a polite, helpful customer service agent.

Next, we fill in the customer's actual question. This format also makes the scenario clearer to the model: the customer has just asked a question.

Question of the customer: Hi, I'd like a refund for the coffee maker I ordered. Would that be possible?

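Then we tell the model that it should give the customer an affirmative answer, by writing the beginning of the agent's reply ourselves: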

Response by the customer service agent: Hello, thank you for reaching out to us. Yes,

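With this prompt we have built a complete question-and-answer context: a conversation between a customer and a service agent, in which we want the agent to answer affirmatively. Putting all of the above together, we get the following prompt: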

This is a conversation between a customer and a polite, helpful customer service agent.

Question of the customer: Hi, I'd like a refund for the coffee maker I ordered. Would that be possible?

Response by the customer service agent: Hello, thank you for reaching out to us. Yes,

Feeding this prompt into a pre-trained language model, we get replies such as the following:

- Yes, we are able to accept returns if the product is unused and unopened.

- Yes, we are happy to refund your purchase. However, we do require you to return the item to our store for a full refund.

- Yes, we can do that. Please send us a message with your name, phone number, and the reason for the refund. We will get back to you as soon as possible.

With this approach and prompt design, we get generated text that is almost indistinguishable from a real agent's reply!
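The three pieces of the customer-service prompt (persona, the customer's question, and the start of the agent's reply) can be assembled programmatically so the same setting is reused for every incoming question. A minimal sketch: the names `customer_service_prompt`, `PERSONA`, and `DEFAULT_PREFIX` are hypothetical, and the blank-line separators simply mirror the layout of the assembled prompt.

```python
# Sketch: assemble the customer-service prompt from its three parts.
# Function and constant names are hypothetical; the wording of each part
# is taken from the example prompt.
PERSONA = (
    "This is a conversation between a customer and a polite, "
    "helpful customer service agent."
)
DEFAULT_PREFIX = "Hello, thank you for reaching out to us. Yes,"


def customer_service_prompt(question: str, response_prefix: str = DEFAULT_PREFIX) -> str:
    """Persona, then the customer's question, then the start of the agent's reply."""
    return (
        f"{PERSONA}\n\n"
        f"Question of the customer: {question.strip()}\n\n"
        f"Response by the customer service agent: {response_prefix}"
    )


if __name__ == "__main__":
    print(customer_service_prompt(
        "Hi, I'd like a refund for the coffee maker I ordered. Would that be possible?"
    ))
```

Because the prompt stops right after "Yes,", the model's continuation starts from an affirmative answer; passing a `response_prefix` without "Yes," would leave that choice to the model.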

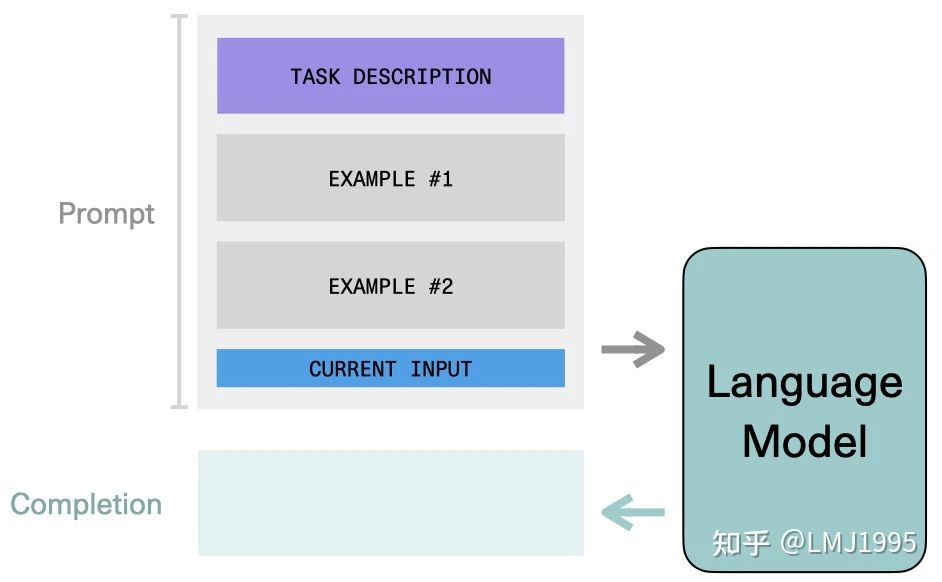

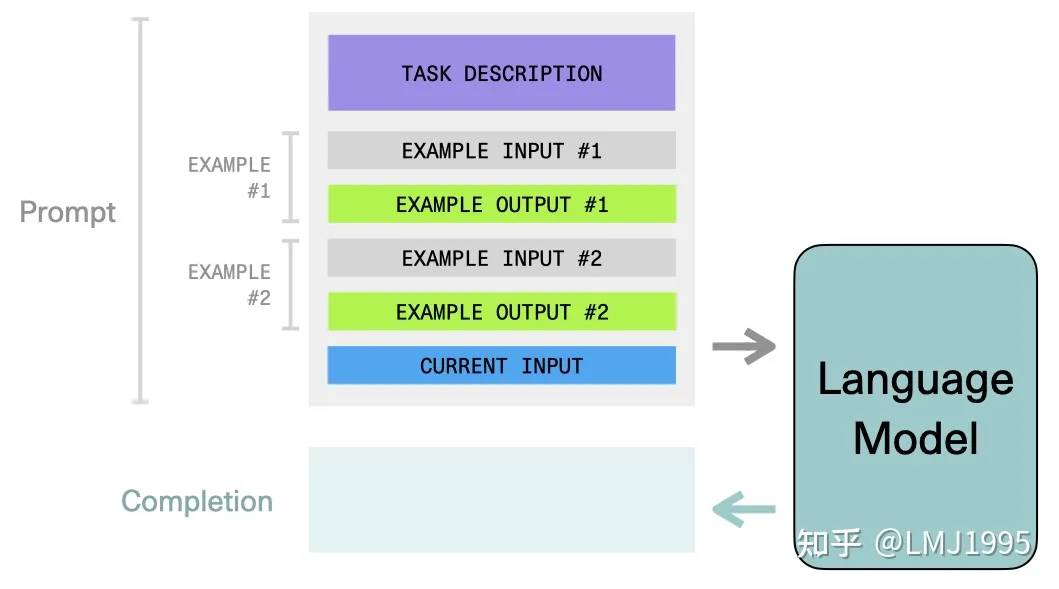

Show the model what you would like to see

Another approach to prompt design is to include examples of the desired output in the input itself. This approach is known as few-shot learning.

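Consider the following example. In a text classification task, we want the model to sort texts into three classes: positive, negative, or neutral. Given the following input: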

Review: "I really enjoyed this movie!"

The sentiment of this review is

One real output (obtained from an actual model) is:

The sentiment of this review is apt, considering the movie's plot,

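This is clearly not what we want. Here we only need the model to make a three-way choice among positive, negative, and neutral, yet the language model produced free-form text instead. We could, of course, attach a fully connected layer and fine-tune along the lines of BERT, but let's see how prompt learning handles it.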

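We build a prompt that contains examples of the inputs and outputs we expect, which shows the model what output format we want. After expanding it with these examples, the input becomes: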

This is a movie review sentiment classifier.

Review: "I loved this movie!"

This review is positive.

Review: "I don't know, it was ok I guess.."

This review is neutral.

Review: "What a waste of time, would not recommend this movie."

This review is negative.

Review: "I really enjoyed this movie!"

This review is

With this prompt, the model readily outputs "positive".
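The few-shot prompt above can be generated from a list of labelled examples, and the model's continuation can then be mapped back onto the three allowed labels. A minimal sketch: `few_shot_prompt`, `parse_label`, and the rule "take the first word of the completion" are assumptions for illustration, not part of the original article.

```python
from typing import List, Optional, Tuple

# Sketch: build a few-shot classification prompt from labelled examples,
# then map the model's continuation back onto one of the three labels.
# Names are hypothetical; the layout mirrors the prompt in the article.
LABELS = ("positive", "negative", "neutral")

EXAMPLES: List[Tuple[str, str]] = [
    ("I loved this movie!", "positive"),
    ("I don't know, it was ok I guess..", "neutral"),
    ("What a waste of time, would not recommend this movie.", "negative"),
]


def few_shot_prompt(header: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Header, then one Review/label pair per example, then the unlabelled query."""
    lines = [header, ""]
    for review, label in examples:
        lines += [f'Review: "{review}"', "", f"This review is {label}.", ""]
    # The prompt stops right where the label should go.
    lines += [f'Review: "{query}"', "", "This review is"]
    return "\n".join(lines)


def parse_label(completion: str) -> Optional[str]:
    """Return the first word of the completion if it is a known label, else None."""
    words = completion.split()
    if not words:
        return None
    first = words[0].strip(".,!\"'").lower()
    return first if first in LABELS else None


if __name__ == "__main__":
    prompt = few_shot_prompt(
        "This is a movie review sentiment classifier.",
        EXAMPLES,
        "I really enjoyed this movie!",
    )
    print(prompt)
```

`parse_label` returns `None` for an off-target continuation such as "apt, considering the movie's plot,", which gives a simple way to detect when the examples failed to steer the model.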

Examples

Let's apply prompts to two more tasks.

Keyword Generation

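In this example, we want to use prompt engineering to perform keyword generation (keyword extraction): given a passage, find the keyword (a word or short phrase) that best represents its central concept.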

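We define the following prompt: it opens with a brief statement of the task, then gives two examples (taken from the Wikipedia articles on John von Neumann and Feminism), and finally supplies a Wikipedia passage about Python as the test input: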

This is a bot that automatically finds the most important keyword for a given text passage.

Text: “John von Neumann (/vɒn ˈnɔɪmən/; Hungarian: Neumann János Lajos, pronounced [ˈnɒjmɒn ˈjaːnoʃ ˈlɒjoʃ]; December 28, 1903 – February 8, 1957) was a Hungarian-American mathematician, physicist, computer scientist, engineer and polymath. Von Neumann was generally regarded as the foremost mathematician of his time[2] and said to be “the last representative of the great mathematicians”.[3] He integrated pure and applied sciences.”

Most important key word: “John von Neumann”

Text: “Some scholars consider feminist campaigns to be a main force behind major historical societal changes for women’s rights, particularly in the West, where they are near-universally credited with achieving women’s suffrage, gender-neutral language, reproductive rights for women (including access to contraceptives and abortion), and the right to enter into contracts and own property.[9] Although feminist advocacy is, and has been, mainly focused on women’s rights, some feminists argue for the inclusion of men’s liberation within its aims, because they believe that men are also harmed by traditional gender roles.[10] Feminist theory, which emerged from feminist movements, aims to understand the nature of gender inequality by examining women’s social roles and lived experience; feminist theorists have developed theories in a variety of disciplines in order to respond to issues concerning gender.”

Most important key word: “Feminism”

Text: “Guido van Rossum began working on Python in the late 1980s, as a successor to the ABC programming language, and first released it in 1991 as Python 0.9.0.[31] Python 2.0 was released in 2000 and introduced new features, such as list comprehensions and a garbage collection system using reference counting and was discontinued with version 2.7.18 in 2020.[32] Python 3.0 was released in 2008 and was a major revision of the language that is not completely backward-compatible and much Python 2 code does not run unmodified on Python 3.”

Most important key word:

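Bingo! The model quickly returns "Python" as the answer. Occasionally it returns "Guido van Rossum" instead, which is understandable, since there is more than one reasonable answer.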
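The keyword prompt follows the same pattern: a header, Text/keyword example pairs, then the new passage with an empty answer slot. A minimal sketch with hypothetical names (`keyword_prompt`, `parse_keyword`); the curly quotes and blank lines follow the prompt above. Keeping only the first line of the completion is an assumption, made in case the model continues by inventing another "Text:" example.

```python
from typing import List, Tuple

# Sketch: build the keyword-extraction prompt and read back the answer.
# Names are hypothetical; the curly quotes and blank lines follow the
# prompt in the article.
HEADER = (
    "This is a bot that automatically finds the most important keyword "
    "for a given text passage."
)


def keyword_prompt(examples: List[Tuple[str, str]], passage: str, header: str = HEADER) -> str:
    """Header, then Text/keyword pairs, then the new passage with an empty answer slot."""
    parts = [header]
    for text, keyword in examples:
        parts.append(f"Text: “{text}”")
        parts.append(f"Most important key word: “{keyword}”")
    parts.append(f"Text: “{passage}”")
    parts.append("Most important key word:")
    return "\n\n".join(parts)


def parse_keyword(completion: str) -> str:
    """Keep only the first line of the completion and drop surrounding quotes.

    Assumption: the model may keep going and invent a further "Text:" example,
    so anything after the first line is ignored.
    """
    first_line = completion.strip().split("\n", 1)[0]
    return first_line.strip().strip("“”\"")
```

Either answer mentioned above, "Python" or "Guido van Rossum", passes through `parse_keyword` unchanged apart from the quotes.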

Example Generation

Another common task is expanding on a given description, for example by continuing a list of ideas. Designing a prompt for this is easy too:

This is a list of ideas for blog posts for tourists visiting Toronto:

1. The best sights to see in Toronto

2. My favourite walks in Toronto

The model easily produced the following continuation:

3. An overview of Toronto

4. Toronto events

5. Restaurants in Toronto

6. Shopping in Toronto

7. Travel tips for Toronto

8. Sightseeing in Toronto

9. What to do in Toronto
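Since the prompt ends partway through a numbered list, the model presumably picks up the numbering and keeps going. A minimal sketch of building such a prompt and extracting the new items from the continuation; `list_prompt`, `parse_ideas`, the one-item-per-line layout, and the regular expression are illustrative assumptions rather than anything from the original article.

```python
import re
from typing import List

# Sketch: build the numbered-list prompt and pull the new items out of the
# model's continuation. Names and the regular expression are illustrative.
ITEM = re.compile(r"^\s*\d+\.\s+(.+?)\s*$")


def list_prompt(title: str, seed_ideas: List[str]) -> str:
    """Title, a blank line, then the seed ideas numbered from 1, ending with a newline."""
    lines = [title, ""] + [f"{i}. {idea}" for i, idea in enumerate(seed_ideas, start=1)]
    return "\n".join(lines) + "\n"


def parse_ideas(completion: str) -> List[str]:
    """Return the text of every line that looks like 'N. idea'; other lines are skipped."""
    ideas = []
    for line in completion.splitlines():
        match = ITEM.match(line)
        if match:
            ideas.append(match.group(1))
    return ideas


if __name__ == "__main__":
    print(list_prompt(
        "This is a list of ideas for blog posts for tourists visiting Toronto:",
        ["The best sights to see in Toronto", "My favourite walks in Toronto"],
    ))
```

Parsing line by line means any trailing text that is not a numbered item is simply ignored.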

Have fun!

References

For a deeper understanding of prompt engineering, see these two articles:

https://zhuanlan.zhihu.com/p/488279606

https://zhuanlan.zhihu.com/p/395795968

Translated by LMJ1995. Translator's column:

https://zhuanlan.zhihu.com/p/526299013

Original article:

https://docs.cohere.ai/prompt-engineering-wiki/
